11.4 Designing effective questions and questionnaires

Learning Objectives

- Identify the steps to write effective survey questions

- Describe some of the ways that survey questions might confuse respondents and how to overcome that possibility

- Apply mutual exclusivity and exhaustiveness to writing closed-ended questions

- Define fence-sitting and floating

- Describe the steps involved in constructing a well-designed questionnaire

- Discuss why pretesting is important

Up to this point, we’ve considered several general points about surveys. We’ve discussed when to use them, their strengths and weaknesses, how frequently they are administered, and the different ways that researchers can administer them. This section dives deeper into surveys. We will look at how to pose understandable questions that will yield usable data and how to present those questions on your questionnaire.

Asking effective questions

To write an effective survey question, you must first identify exactly what you would like to know. While it sounds silly to state something so obvious, I cannot stress enough how easy it is to forget to include important questions when designing a survey. Begin by looking at your research question. Perhaps you wish to identify the factors that contribute to students’ ability to transition from high school to college. To understand which factors shaped successful students’ transitions to college, you’ll need to include questions in your survey about all the possible factors that could contribute. How do you know what to ask? Consulting the literature on the topic will certainly help, but you should also brainstorm on your own and consult with others about what they think may be important in the transition to college. Limitations of time and space won’t allow you to include every single item you’ve come up with, so you’ll also need to rank your questions and include only the ones that you feel are most important. Think back to when you operationalized the variables for your study. How did you plan to measure them? If you planned to ask specific questions or use a scale, those should be included in your survey.

Although I have stressed the importance of including questions that cover all topics that you feel are important to your research question, I do not recommend taking an “everything-but-the-kitchen-sink” approach. Including every possible question that you think of puts an unnecessary burden on your survey respondents. Remember that you’ve asked your respondents to give their time, attention, and care in responding to your questions. Show them your respect by asking only questions that you view as important.

Once you’ve identified all the topics that you’d like to ask questions about, you’ll need to write those questions. This is not the time to show off your creative writing skills. A survey is a technical instrument and should be written in a way that is as direct and concise as possible. As I’ve mentioned earlier, your survey respondents have agreed to give their time and attention to your survey. The best way to show your appreciation for their time is to not waste it. Ensuring that your questions are clear and concise will help show your respondents the gratitude they deserve.

Relatedly, you should make sure that every question you pose will be relevant to every person you ask. This means two things. First, respondents must have knowledge about whatever topic you are asking them about. Second, respondents must have experience with whatever events, behaviors, or feelings you are asking them to report. For example, you likely wouldn’t ask a sample of 18-year-olds how they would have advised President Reagan to proceed when the world found out that the United States was selling weapons to Iran in the 1980s. For one thing, few 18-year-olds are likely to have a clue about how to advise a president. Furthermore, the 18-year-olds of today were not even alive during Reagan’s presidency, so they have not experienced the Iran-Contra affair. Think back to our example study of the transition to college. If you are going to use the phrase “transition to college” in your survey, respondents must understand exactly what you mean by it, and they must have personally experienced that transition.

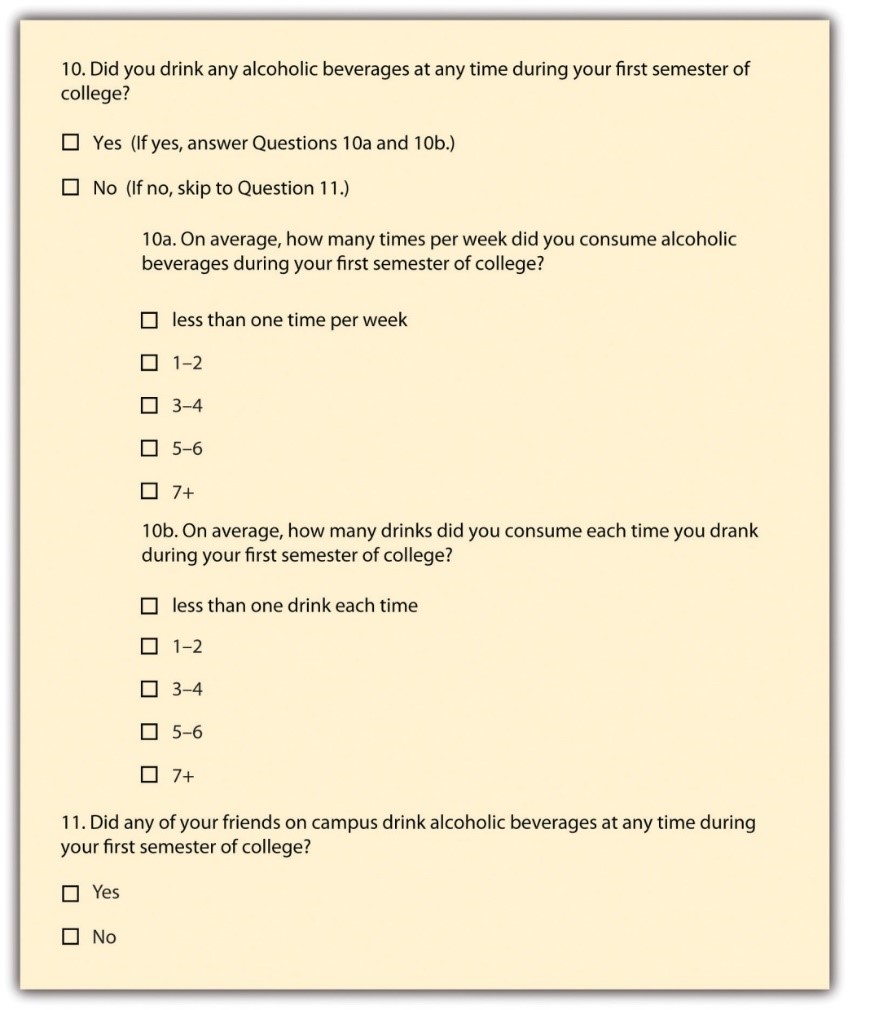

If you decide to pose questions about topics that only a portion of respondents will have experience with, then it may be appropriate to introduce a filter question into your survey. A filter question is designed to identify a subset of survey respondents to be asked additional questions that are not relevant to the entire sample. Perhaps in your survey on the transition to college you want to know whether substance use plays any role in students’ transitions. You may ask students how often they drank during their first semester of college, but this question assumes that all students consume alcohol, and it would not make sense to ask nondrinkers how often they drank. Drinking frequency can certainly affect someone’s transition to college, so it is worth asking this question even if it may not be relevant for some respondents. This is just the sort of instance when a filter question would be appropriate. You may pose the question as it is presented in Figure 11.1.
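In an online questionnaire, a filter question is usually implemented as skip logic. The Python sketch below shows that routing under stated assumptions: the item wording, the constant names, and the function `items_to_show` are hypothetical illustrations, not the exact items in Figure 11.1 or the behavior of any particular survey platform.

```python
from typing import Dict, List

# Hypothetical item wording; Figure 11.1 may phrase these items differently.
FILTER_ITEM = "Did you drink alcohol during your first semester of college?"
FOLLOW_UP_ITEMS = [
    "How often did you drink during your first semester?",
    "On average, how many drinks did you have each time you drank?",
]


def items_to_show(responses: Dict[str, str]) -> List[str]:
    """Return the follow-up items a respondent should see.

    Only respondents who answer "yes" to the filter item are routed to the
    follow-up items; everyone else skips ahead to the next section.
    """
    answer = responses.get(FILTER_ITEM, "").strip().lower()
    return list(FOLLOW_UP_ITEMS) if answer == "yes" else []
```

For example, `items_to_show({FILTER_ITEM: "Yes"})` returns both follow-up items, while `items_to_show({FILTER_ITEM: "No"})` returns an empty list, so nondrinkers never see questions that do not apply to them.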

Sometimes, researchers may ask a question in a way that confuses many survey respondents. Survey researchers should take great care to avoid questions that pose double negatives, contain confusing or culturally specific terms, or combine more than one question into what appears to be a single question. Any time respondents are forced to decipher questions that use double negatives, confusion is bound to ensue. In the previous example where we posed questions about drinking, what if we had asked respondents, “Did you not abstain from drinking during your first semester of college?” This example is obvious, but it demonstrates that survey researchers must be careful to word their questions so that respondents are not asked to decipher double negatives. In general, avoiding negative terms in your question wording will help to increase respondent understanding.

Additionally, you should avoid using terms or phrases that may be regionally or culturally specific unless you are certain that all respondents come from the region or culture whose terms you are using. For example, think about the word “holler.” Where I grew up in New Jersey, “holler” is an uncommon synonym for “yell.” When I first moved to my home in southwest Virginia, I did not know what people meant when they talked about “hollers.” I came to learn that Virginians use the term “holler” to describe a small valley between mountains. If I used “holler” in that way on my survey, people who live near me might understand, but almost everyone else would be totally confused.

Similar issues arise when surveys contain jargon, or technical language, that people do not commonly understand. For example, if you asked adolescents how they experience imaginary audience, they likely would not be able to link that term to the concepts from David Elkind’s theory. [2] The questions in your study must be understandable to the participants. Instead of using the jargon term, you would need to break it down into language that is easier for your respondents to understand.

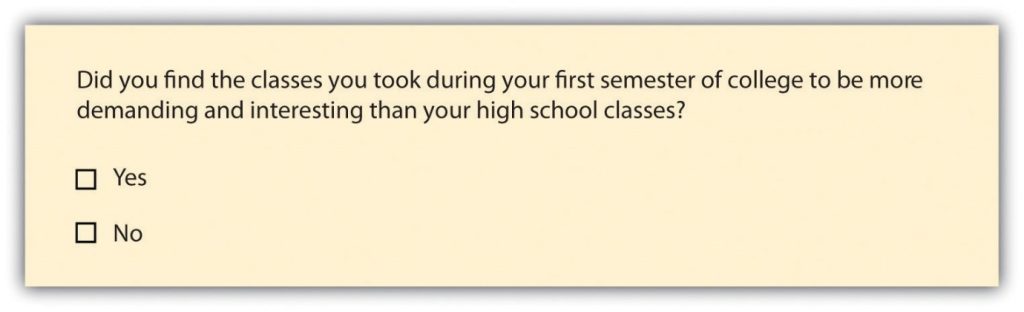

Asking multiple questions as though they are a single question can also confuse survey respondents. These types of questions are known as double-barreled questions. Using our example of the transition to college, Figure 11.2 shows a double-barreled question.

Do you see what makes the question double-barreled? If a respondent felt that their college classes were more demanding but less interesting than their high school classes, they may have trouble responding to this question. The question combines “demanding” and “interesting,” so there is no way to respond yes to one criterion but no to the other.

When constructing survey questions, it is also important to minimize the potential problem of social desirability. It is a part of human nature to want to look good and present ourselves in an agreeable manner. In addition, we probably know the politically correct response to a variety of questions, whether we agree or disagree with those responses. In survey research, social desirability refers to the idea that respondents will try to answer questions in a way that will present them in a favorable light. (You may recall we covered social desirability bias in Chapter 9.) Let’s go back to our example about transitioning to college to explore this concept further.

Perhaps we decide that we can understand the transition to college by asking respondents if they have ever cheated on an exam in high school or college. We all know that cheating on exams is generally frowned upon (at least I hope we all know this), so it may be difficult to get people to admit to cheating on a survey. If you can guarantee respondents’ confidentiality or their anonymity, they are more likely to answer honestly about having engaged in this socially undesirable behavior. Another way to avoid problems of social desirability is to phrase difficult questions in the most benign way possible. Earl Babbie (2010) [3] offers a useful suggestion for helping you do this—simply imagine how you would feel responding to your survey questions. If you would be uncomfortable, chances are others would as well.

Finally, it is important to get feedback on your survey questions from as many people as possible, especially people who are like those in your sample. Now is not the time to be shy: ask for help! Recruit your friends, mentors, family, and even professors to look over your survey and provide constructive feedback. The more feedback you receive, the better your chances of developing questions that a wide variety of people, including those in your sample, can understand.

To pose effective survey questions, researchers should do the following:

- Identify what they would like to know

- Keep questions clear and succinct

- Make questions relevant to respondents

- Use filter questions when necessary

- Avoid questions that may confuse respondents

- Avoid double negatives, culturally specific terms, and jargon

- Avoid posing more than one question at a time (double-barreled questions)

- Imagine how respondents would feel responding to questions

- Get feedback, especially from people who resemble your sample population

Response options

While it is important to pose clear and understandable survey questions, it is also imperative to provide respondents with unambiguous response options. Response options are the answers that you provide to the people taking your survey. Generally, respondents will be asked to choose a single (or best) response to each question you pose, though sometimes it makes sense to instruct respondents to choose multiple response options. Keep in mind that accepting multiple responses to a single question may add complexity when tallying and analyzing your survey results.

Offering response options assumes that your questions will be closed-ended questions. In a quantitative written survey, which is the type of survey we’ve been discussing here, chances are good that your questions will be closed-ended. This means that you, the researcher, will provide respondents with a limited set of options for their responses. There are a few guidelines for writing effective closed-ended questions. First, be sure that your response options are mutually exclusive. Look back at Figure 11.1, which contains questions about how often and how many drinks respondents consumed. Do you notice that there are no overlapping categories in the response options for these questions? This aspect of question construction seems obvious, but it can be easily overlooked. Response options should also be exhaustive, which means that every possible response should be covered. In Figure 11.1, question 10a is an excellent example of an exhaustive question. Those who drank an average of once per month can choose the first response option (“less than one time per week”), while those who drank multiple times a day each day of the week can choose the last response option (“7+”). All the possibilities in between these two extremes are covered by the middle three response options.
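When response options are numeric ranges, both properties can be checked mechanically. The Python sketch below is a hypothetical illustration rather than part of any survey package: the category bounds are invented (they are not the actual options in Figure 11.1), it assumes answers are whole numbers, and it writes an open-ended top category such as “7+” as `(7, None)`. A value that matches no category reveals a gap in exhaustiveness; a value that matches two categories reveals a violation of mutual exclusivity.

```python
from typing import List, Optional, Tuple

# A hypothetical category: (lowest value, highest value), inclusive.
# None as the upper bound means "and up", as in a "7+" option.
Category = Tuple[int, Optional[int]]


def matching_categories(value: int, categories: List[Category]) -> List[int]:
    """Return the indexes of every category that contains `value`."""
    return [
        i for i, (low, high) in enumerate(categories)
        if value >= low and (high is None or value <= high)
    ]


def check_options(categories: List[Category], max_value: int) -> Tuple[List[int], List[int]]:
    """Check whole-number answers 0..max_value against the categories.

    Returns (gaps, overlaps): values no category covers (not exhaustive)
    and values more than one category covers (not mutually exclusive).
    """
    gaps, overlaps = [], []
    for value in range(max_value + 1):
        hits = len(matching_categories(value, categories))
        if hits == 0:
            gaps.append(value)
        elif hits > 1:
            overlaps.append(value)
    return gaps, overlaps
```

For example, `check_options([(0, 0), (1, 2), (3, 4), (5, 6), (7, None)], 20)` returns `([], [])`, while the flawed set `check_options([(1, 2), (2, 4), (5, 6)], 10)` returns `([0, 7, 8, 9, 10], [2])`: respondents answering 0 or 7 or more have no option to choose, and a respondent answering 2 fits two options.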

Surveys need not be limited to closed-ended questions. Sometimes survey researchers include open-ended questions in their survey instruments as a way to gather additional details from respondents. In an open-ended question, respondents are asked to reply to the question in their own way, using their own words. These questions are generally used to gather more information about participants’ experiences or feelings about a topic. For example, if a survey includes closed-ended questions asking respondents to report on their involvement in extracurricular activities during college, an open-ended question could ask respondents why they participated in those activities or what they gained from their participation. While these types of responses may also be captured using a closed-ended format, allowing participants to share information using their own words can make for a more satisfying survey experience. Open-ended questions can also reveal new motivations or explanations that had not occurred to the researcher.

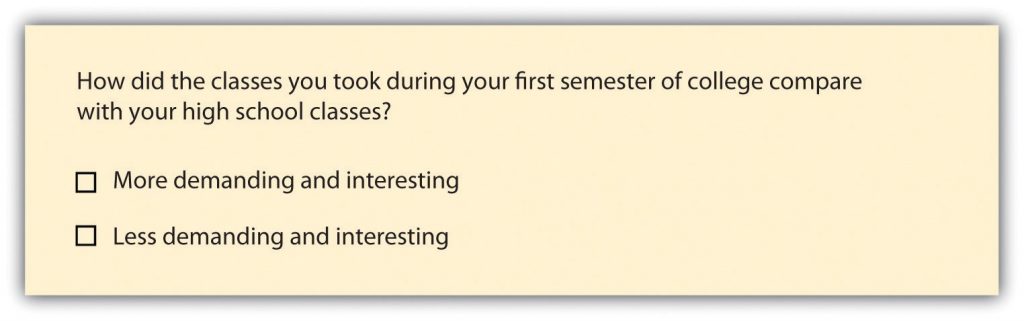

Earlier in this section, we discussed issues with double-barreled questions. Response options can also be double-barreled, and this should be avoided. Figure 11.3 is an example of a question that uses double-barreled response options.

Other things to avoid when it comes to response options include fence-sitting and floating. Fence-sitters are respondents who choose neutral response options, even if they have an opinion. This can potentially occur if respondents are given rank-ordered response options, such as strongly agree, agree, no opinion, disagree, and strongly disagree. You may recall that this is called a Likert scale. Some people will be drawn to choose “no opinion” even if they have an opinion, particularly if their true opinion is not socially desirable. Floaters, on the other hand, are respondents who choose a substantive answer to a question when they don’t understand the question or don’t have an opinion. If a respondent is only given four rank-ordered response options, such as strongly agree, agree, disagree, and strongly disagree, those who have no opinion have no choice but to select a response that suggests they have an opinion.

As you can see, floating is the flip side of fence-sitting, so the solution to one problem is often the cause of the other. Which approach you take depends on the goals of your research. Sometimes researchers specifically want to learn something about people who claim to have no opinion. In this case, allowing for fence-sitting would be necessary. Other times, researchers feel confident that their respondents will be familiar with every topic in the survey. In this case, perhaps it is okay to force respondents to choose an opinion. There is no always-correct solution to either problem.

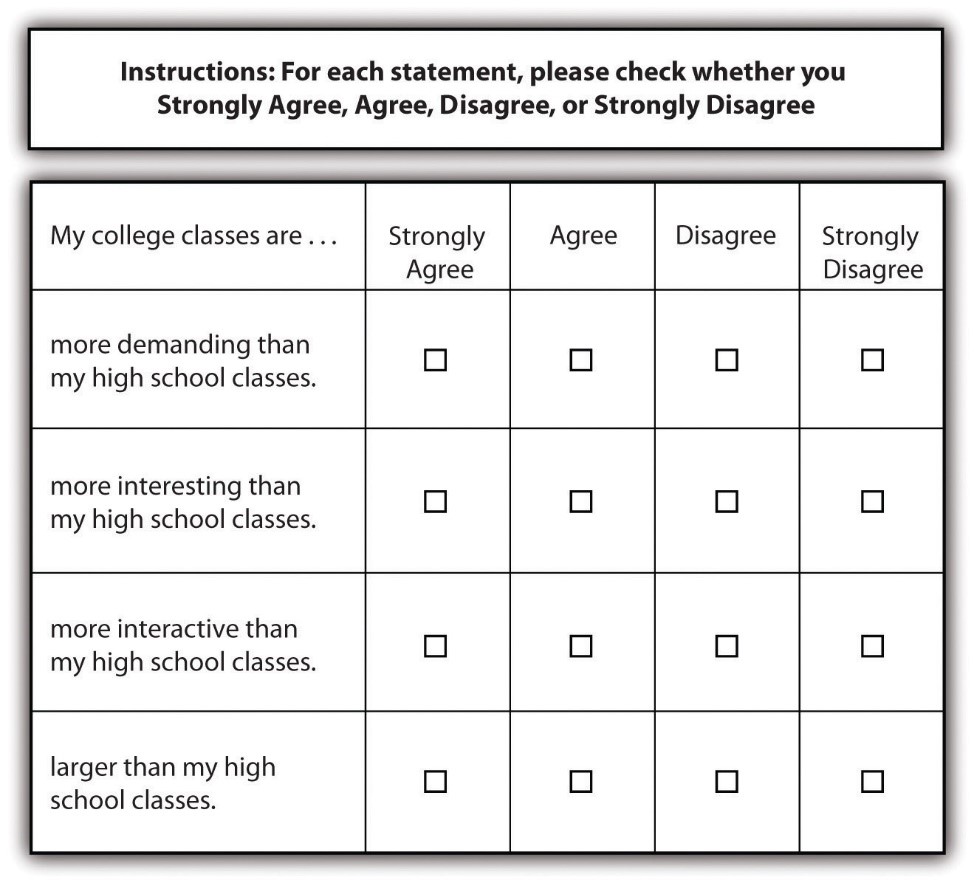

Finally, using a matrix can help to streamline response options. A matrix categorizes questions and lists them based on answer category. If you have a set of questions that share the same response options, it may make sense to create a matrix rather than posing each question and its response options individually. Not only will this save you some space in your survey, but it will also help respondents progress through your survey more easily. A sample matrix can be seen in Figure 11.4.

Designing questionnaires

In addition to constructing quality questions and posing clear response options, you’ll also need to think about how to present your written questions and response options to survey respondents. A questionnaire is the document (either hard copy or online) that respondents will be given to read your questions and provide their responses. Designing questionnaires takes some thought.

Once you’ve come up with a set of survey questions you feel confident about, the next step is to group them by theme. In our example of the transition to college, perhaps we’d have a few questions about study habits, a few that are focused on friendships, and still others about exercise and eating habits. Those may be the themes that we organize our questions around, or we may want to present questions about pre-college life and then present a series of questions about life after beginning college. The point here is to be deliberate about how you present your questions to respondents.

Once you have grouped similar questions together, you’ll need to think about the order in which to present those question groups. Most survey researchers agree that it is best to begin a survey with questions that will make respondents want to continue (Babbie, 2010; Dillman, 2000; Neuman, 2003). [5] In other words, we do not want to bore respondents immediately, but we also do not want to overwhelm them and scare them away. Additionally, there is some disagreement about where demographic questions regarding age, gender, and race should be placed on questionnaires. On the one hand, placing them at the beginning of the questionnaire may lead respondents to think the survey is boring, unimportant, and not something they will bother completing. On the other hand, if your survey deals with a sensitive or difficult topic like child sexual abuse or criminal activity, you don’t want to scare respondents away or shock them by beginning with your most intrusive questions; in that case, opening with less threatening demographic items may ease respondents into the survey.

Truthfully, the order in which you present your survey questions is best determined by the unique characteristics of your research. Only you (the researcher) can integrate feedback to determine the best way to order your questions. To do so, think about the unique characteristics of your topic, your questions, and most importantly, your sample. Being mindful of the characteristics and needs of the respondent population should help guide you as you determine the most appropriate order in which to present your questions.

You’ll also need to consider the time it will take respondents to complete your questionnaire. Surveys vary in length, from a few pages to a dozen or more pages, which means they also vary in the time it takes to complete them. The length of your survey depends on several factors. First, what is it that you wish to know? If you want to understand how grades vary by gender and school year, then you will certainly pose fewer questions than if you wanted to know how college experiences are shaped by demographics, college characteristics, housing situation, family background, college major, social networks, and extracurricular activities. Even if your overall research question requires a sizable number of survey questions to be included in your questionnaire, try to keep the questionnaire as brief as possible. If your respondents think you’ve included useless questions just for the sake of it, then they may not want to complete your survey.

Second, and perhaps more important, how much time are respondents willing to spend completing your questionnaire? College students have limited free time outside of their studies, so they may not spend more than a few minutes completing your survey. However, if you are endorsed by a professor who will allow you to administer your survey during class, students may be willing to give you a little more time (though perhaps the professor will not). The amount of time that survey researchers ask respondents to spend on questionnaires varies greatly. Some researchers advise that surveys should not take longer than about 15 minutes to complete (as cited in Babbie, 2010), [4] whereas others suggest that up to 20 minutes is acceptable (Hopper, 2010). [5] As with question order, there is no clear-cut, universal guideline for questionnaire length. The unique characteristics of your study and your sample should be considered to determine how long to make your questionnaire.

A good way to estimate the time it will take respondents to complete your questionnaire is through pretesting. Pretesting allows you to gain feedback on your questionnaire so you can make improvements before you administer it. Pretesting can be quite expensive and time-consuming if you wish to test your questionnaire on a large sample of people who very much resemble the sample to whom you will eventually administer the finalized version of your questionnaire. However, you can gain insight and make great improvements to your questionnaire simply by pretesting with a small number of accessible people, perhaps a few friends who owe you a favor. Pretesting your questionnaire is beneficial because you can see whether your questions are easy to understand, get feedback on question wording and order, find out whether any questions are boring or offensive, and learn where filter questions might be needed. You can also time pretesters as they take your survey. This will give you a good estimate of the completion time to share with respondents when you administer your survey, and it will show whether you have some wiggle room to add items or need to cut a few.
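The timing step involves a little arithmetic. The Python sketch below is one hypothetical way to summarize pretest times; the function name, the example times, and the 15-minute target are illustrative assumptions, not a prescribed procedure. It uses the median as the typical completion time and compares it with the target length you are aiming for.

```python
import statistics
from typing import Dict, List


def summarize_pretest_times(minutes: List[float], target: float) -> Dict[str, float]:
    """Summarize hypothetical pretest completion times (in minutes).

    `target` is the questionnaire length the researcher is aiming for.
    A positive `slack` suggests room to add items; a negative one
    suggests cutting items.
    """
    typical = statistics.median(minutes)  # median resists one unusually slow pretester
    return {
        "median": typical,
        "longest": max(minutes),
        "slack": target - typical,
    }
```

With pretest times of 11, 12.5, 14, and 19 minutes and a 15-minute target, the sketch reports a median of 13.25 minutes, a longest time of 19 minutes, and 1.75 minutes of slack. Reporting the longest time alongside the median is a deliberate choice: it reminds you that some respondents will take noticeably longer than the typical estimate you give them.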

Perhaps this goes without saying, but your questionnaire should also have an attractive design. A messy presentation style can confuse respondents or, at the very least, annoy them. Be brief, to the point, and as clear as possible. Avoid cramming too much into a single page and make your font size readable. I suggest using a font that is at least 12 point, or larger depending on the characteristics of your sample. Additionally, try to leave a reasonable amount of space between items and make sure all instructions are exceptionally clear. Think about books, documents, articles, or web pages that you have read. Which were relatively easy to read? Which were easy on the eyes and why? Try to mimic those features in the presentation of your survey questions.

Key Takeaways

- Brainstorming and consulting the literature are important steps during the early stages of preparing to write effective survey questions.

- Make sure your survey questions will be relevant to all respondents and that you use filter questions when necessary.

- Getting feedback on your survey questions is a crucial step in the process of designing a survey.

- When choosing response options, keep in mind that the problems of fence-sitting and floating are interrelated: the solution to one problem may be the cause of the other.

- Pretesting is an important step to improve a survey before administering the finalized version.

Glossary

Closed-ended question– question for which the researcher offers response options

Double-barreled question– a question that asks two different questions at the same time, making it difficult to respond accurately

Fence-sitters– respondents who choose neutral response options, even if they have an opinion

Filter question– question that identifies some subset of survey respondents who are asked additional questions that are not relevant to the entire sample

Floaters– respondents who choose a substantive answer to a question when they don’t understand the question or don’t have an opinion

Matrix question– lists questions in sets based on answer category or response option

Open-ended questions– questions for which the researcher does not include response options, allowing for respondents to answer the question in their own words

- All figures in this chapter were copied from Blackstone, A. (2012) Principles of sociological inquiry: Qualitative and quantitative methods. Saylor Foundation. Retrieved from: https://saylordotorg.github.io/text_principles-of-sociological-inquiry-qualitative-and-quantitative-methods/ Shared under CC-BY-NC-SA 3.0 License (https://creativecommons.org/licenses/by-nc-sa/3.0/) ↵

- See https://en.wikipedia.org/wiki/Imaginary_audience for more information on the theory of imaginary audience. ↵

- Babbie, E. (2010). The practice of social research (12th ed.). Belmont, CA: Wadsworth. ↵

- All figures in this chapter were copied from Blackstone, A. (2012) Principles of sociological inquiry: Qualitative and quantitative methods. Saylor Foundation. Retrieved from: https://saylordotorg.github.io/text_principles-of-sociological-inquiry-qualitative-and-quantitative-methods/ Shared under CC-BY-NC-SA 3.0 License (https://creativecommons.org/licenses/by-nc-sa/3.0/) ↵

- Babbie, E. (2010). The practice of social research (12th ed.). Belmont, CA: Wadsworth; Dillman, D. A. (2000). Mail and Internet surveys: The tailored design method (2nd ed.). New York, NY: Wiley; Neuman, W. L. (2003). Social research methods: Qualitative and quantitative approaches (5th ed.). Boston, MA: Pearson. ↵